June 24, 2025

How to Use LiteLLM to Reduce AI Development Costs for SMBs

Imagine you're part of a lean startup team working on an AI-powered product. You've got one foot in OpenAI, you're dipping your toes into Claude, and your CTO keeps raving about Mistral and Groq. Each option has its strengths: OpenAI's GPT-4, Anthropic's Claude, and open-source models such as Mistral or Llama 3 each excel at different tasks, from summarization to code completion to multilingual response generation. Mixing them lets you use the best model for each job.

But this flexibility comes at a cost:

1. Separate SDKs and APIs

2. Inconsistent input/output formats

3. Custom error handling logic per provider

4. Separate token and billing analytics

5. More time spent troubleshooting latency

These are engineering challenges that grow with your model count. And that’s exactly where LiteLLM comes in.

The Problem with Multi-Model Madness

Most startups want two things: fast iteration and freedom from vendor lock-in. But the moment you start using multiple LLM providers, whether OpenAI, Azure, Anthropic, or Hugging Face, you end up juggling different APIs, different rate limits, and different quirks.

And guess what? That complexity doesn’t scale.

You’re either writing brittle wrapper code that breaks every time an API hiccups, or worse, committing to one provider and praying they don’t raise prices next month.

What is LiteLLM?

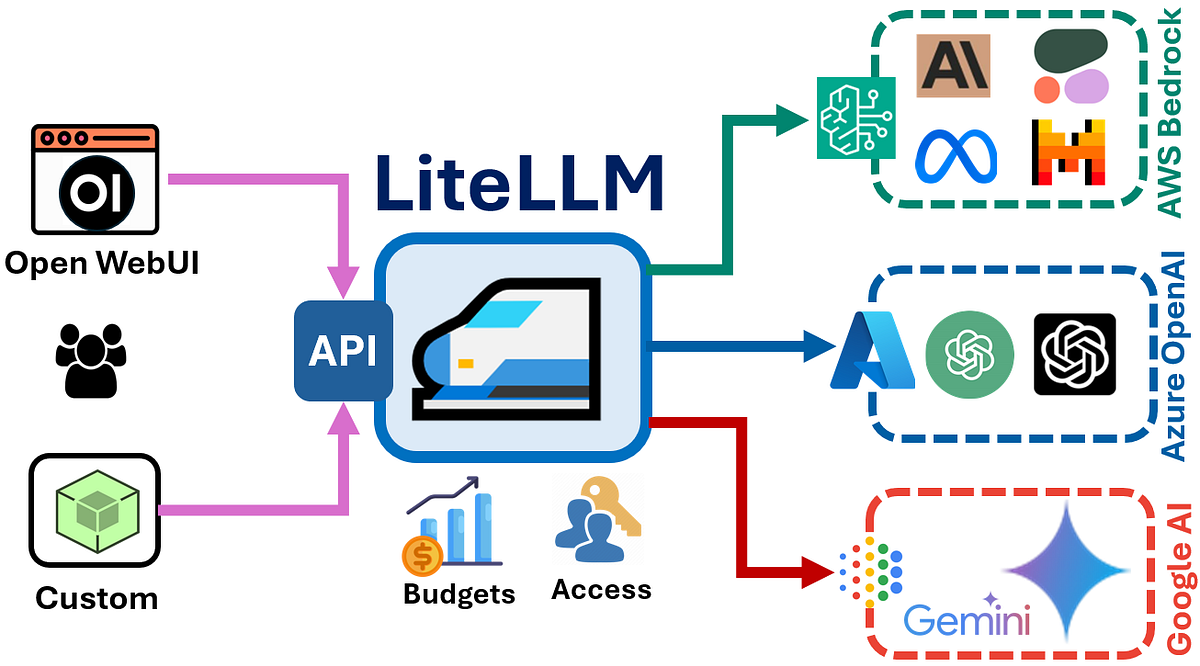

LiteLLM is an open-source Python SDK and proxy server (an LLM gateway) that lets you call many LLM providers through a single OpenAI-compatible API. It keeps your infrastructure provider-agnostic while adding observability, failover, caching, and cost control.

Supported Providers (a partial list as of this post's date; LiteLLM supports 100+ providers):

1. OpenAI

2. Azure OpenAI

3. Anthropic (Claude)

4. Cohere

5. Hugging Face (incl. Inference Endpoints)

6. Perplexity

7. Aleph Alpha

8. Together AI

9. Ollama (local models)

10. LM Studio

11. Mistral, Groq, Google Gemini (via beta integrations)

Architectural Overview

Client Code
   ↓
LiteLLM Proxy → OpenAI
              → Anthropic
              → Ollama (local inference)
              → Azure
   ↓
Observability + Load Balancer + Caching + Cost Tracking
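The caching layer in this diagram is easiest to picture as exact-match caching. When a request is identical to an earlier one (same model, messages, and parameters), the stored response is returned and no new paid call is made. Below is a minimal in-memory sketch of that idea under this assumption. The names `ResponseCache`, `cache_key`, and `fake_provider` are hypothetical illustrations, not LiteLLM's API. LiteLLM's own caching is enabled through its configuration and can use backends such as Redis.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, str]


def cache_key(model: str, messages: List[Message], **params: Any) -> str:
    """Build a deterministic key from everything that affects the response."""
    payload = {"model": model, "messages": messages, "params": params}
    # sort_keys makes the JSON (and therefore the hash) independent of dict order
    canonical = json.dumps(payload, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ResponseCache:
    """Hypothetical exact-match cache placed in front of a provider call."""

    def __init__(self, call_provider: Callable[..., str]) -> None:
        self._call_provider = call_provider
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def complete(self, model: str, messages: List[Message], **params: Any) -> str:
        key = cache_key(model, messages, **params)
        if key in self._store:
            self.hits += 1  # served from cache: no provider call, no token cost
            return self._store[key]
        self.misses += 1
        response = self._call_provider(model, messages, **params)
        self._store[key] = response
        return response


# Stand-in for a real (billed) provider call, so the sketch runs offline.
def fake_provider(model: str, messages: List[Message], **params: Any) -> str:
    return f"[{model}] echo: {messages[-1]['content']}"


cache = ResponseCache(fake_provider)
msgs = [{"role": "user", "content": "Summarize our Q2 report"}]
first = cache.complete("gpt-4", msgs, temperature=0)
second = cache.complete("gpt-4", msgs, temperature=0)  # identical request -> cache hit
print(first == second, cache.hits, cache.misses)  # True 1 1
```

Parameters such as `temperature` belong in the key. If the same messages are sent with different sampling settings, they should not share a cached answer.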

LiteLLM exposes the same API structure as OpenAI's /v1/chat/completions, so your front-end or backend inference logic doesn’t have to change when switching providers.
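Because the proxy speaks the OpenAI chat-completions format, switching providers at the request level means changing only the `model` string. The sketch below builds such a request with Python's standard library but does not send it. It assumes a proxy at http://localhost:4000, as elsewhere in this post. The model names `gpt-4` and `claude-3-sonnet` are hypothetical aliases that would have to be defined in your proxy configuration.

```python
import json
import urllib.request
from typing import Dict, List

PROXY_URL = "http://localhost:4000/v1/chat/completions"  # assumed local proxy address


def build_chat_request(model: str, messages: List[Dict[str, str]], api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the LiteLLM proxy.

    Only `model` changes between providers; the body shape stays the same.
    """
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        PROXY_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


messages = [{"role": "user", "content": "Translate 'hello' into French."}]
openai_req = build_chat_request("gpt-4", messages, api_key="sk-placeholder")
claude_req = build_chat_request("claude-3-sonnet", messages, api_key="sk-placeholder")  # hypothetical alias

# Same endpoint and payload shape; only the model name differs.
print(openai_req.full_url == claude_req.full_url)  # True
# To actually send it (requires a running proxy): urllib.request.urlopen(openai_req)
```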

Why It Matters for Startups

Unified API, Reduced Integration Time

No need to maintain a separate wrapper for every provider. With LiteLLM, your code talks to one consistent schema: less glue code and fewer edge-case bugs.

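# Traditional integration: a separate SDK (and response format) per provider
import anthropic
from openai import OpenAI

OpenAI().chat.completions.create(...)
anthropic.Anthropic().messages.create(...)

# LiteLLM integration: the same OpenAI client, pointed at the LiteLLM proxy
OpenAI(base_url="http://localhost:4000").chat.completions.create(...)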
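Much of the glue code that disappears is response translation. Each provider returns a differently shaped payload, and LiteLLM maps them onto the OpenAI schema. The hypothetical `anthropic_to_openai` function below is a simplified sketch of that idea, not LiteLLM's internal code. It assumes the publicly documented shape of an Anthropic Messages API response: a list of content blocks plus `input_tokens`/`output_tokens` usage. It handles text blocks only.

```python
from typing import Any, Dict

# How Anthropic stop reasons map onto OpenAI finish reasons (simplified).
STOP_REASON_MAP = {
    "end_turn": "stop",
    "stop_sequence": "stop",
    "max_tokens": "length",
    "tool_use": "tool_calls",
}


def anthropic_to_openai(resp: Dict[str, Any]) -> Dict[str, Any]:
    """Convert an Anthropic Messages response dict into an OpenAI-style chat completion dict."""
    # Anthropic returns a list of content blocks; keep only the text blocks.
    text = "".join(block["text"] for block in resp["content"] if block["type"] == "text")
    usage = resp["usage"]
    return {
        "id": resp["id"],
        "object": "chat.completion",
        "model": resp["model"],
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": text},
                "finish_reason": STOP_REASON_MAP.get(resp.get("stop_reason"), "stop"),
            }
        ],
        "usage": {
            "prompt_tokens": usage["input_tokens"],
            "completion_tokens": usage["output_tokens"],
            "total_tokens": usage["input_tokens"] + usage["output_tokens"],
        },
    }


# Example input, trimmed to the fields this sketch uses (values are made up).
claude_response = {
    "id": "msg_123",
    "type": "message",
    "role": "assistant",
    "model": "claude-3-sonnet",
    "content": [{"type": "text", "text": "Bonjour"}],
    "stop_reason": "end_turn",
    "usage": {"input_tokens": 12, "output_tokens": 3},
}

unified = anthropic_to_openai(claude_response)
# Downstream code reads every provider's output the same way:
print(unified["choices"][0]["message"]["content"], unified["usage"]["total_tokens"])  # Bonjour 15
```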

Dynamic Routing and Failover

Say you hit OpenAI's rate limit mid-request. LiteLLM can automatically fall back to Claude or Mistral using configurable retry policies, which is critical for production workloads.

curl http://localhost:4000/v1/chat/completions \
  -H "Authorization: Bearer $API_KEY" \
  -H "Content-Type: application/json" \
  -d '{"model": "gpt-4", "messages": [...]}'

If the GPT-4 call fails, LiteLLM retries it and then falls back to Claude or another configured fallback model.
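The retry-then-fallback pattern fits in a few lines of plain Python. This sketch illustrates the pattern under simplifying assumptions and is not LiteLLM's implementation. `RateLimitError`, `complete_with_fallbacks`, and the stub providers are hypothetical, and a real setup would also add backoff between retries and handle timeouts. In LiteLLM itself you declare the fallback order and retry count in the proxy configuration (its `fallbacks` and `num_retries` settings) rather than writing this loop.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Message = Dict[str, str]
Provider = Callable[[List[Message]], str]


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's HTTP 429 error."""


def complete_with_fallbacks(
    messages: List[Message],
    providers: Sequence[Tuple[str, Provider]],
    num_retries: int = 2,
) -> Tuple[str, str]:
    """Try each (model_name, provider) in order, retrying rate-limited calls.

    Returns (model_name, response) from the first provider that succeeds.
    """
    errors: List[str] = []
    for model_name, call in providers:
        # one initial attempt plus `num_retries` retries per model
        for attempt in range(num_retries + 1):
            try:
                return model_name, call(messages)
            except RateLimitError as exc:
                errors.append(f"{model_name} attempt {attempt + 1}: {exc}")
        # retries exhausted for this model: fall through to the next one
    raise RuntimeError("All models failed: " + "; ".join(errors))


# Stub providers so the sketch runs offline.
def gpt4_stub(messages: List[Message]) -> str:
    raise RateLimitError("429 Too Many Requests")


def claude_stub(messages: List[Message]) -> str:
    return "Claude answered: " + messages[-1]["content"]


model, answer = complete_with_fallbacks(
    [{"role": "user", "content": "Draft a refund email"}],
    providers=[("gpt-4", gpt4_stub), ("claude", claude_stub)],
)
print(model, "->", answer)  # claude -> Claude answered: Draft a refund email
```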

Built-in Observability & Cost Analytics

LiteLLM logs:

1. Model used

2. Latency per request

3. Tokens in/out

4. Cost per request

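You get CSV exports and a dashboard, which makes it easy to track model usage across experiments and demos. With the proxy's spend tracking enabled (this requires connecting the proxy to a database), per-request spend logs can also be queried over HTTP:

GET /spend/logs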

No more approximating cost in spreadsheets.
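Per-request cost is simple arithmetic on the logged token counts: input tokens times the model's input price, plus output tokens times its output price. The sketch below shows that calculation and a per-model roll-up. The prices in `PRICE_PER_MILLION` and the model names are made-up placeholders, not any provider's actual rates. LiteLLM maintains its own model price map, so in practice you would not hard-code these. `Decimal` is used so the sums are exact.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, Tuple

# Placeholder prices in USD per 1M tokens as (input, output). NOT real rates.
PRICE_PER_MILLION: Dict[str, Tuple[Decimal, Decimal]] = {
    "premium-model": (Decimal("10.00"), Decimal("30.00")),
    "budget-model": (Decimal("0.50"), Decimal("1.50")),
}


@dataclass
class RequestLog:
    """One logged request: model used, latency, and tokens in/out."""
    model: str
    latency_ms: int
    prompt_tokens: int
    completion_tokens: int


def request_cost(log: RequestLog) -> Decimal:
    """cost = prompt_tokens * input_price + completion_tokens * output_price (per 1M tokens)."""
    in_price, out_price = PRICE_PER_MILLION[log.model]
    return (log.prompt_tokens * in_price + log.completion_tokens * out_price) / Decimal(1_000_000)


def cost_by_model(logs: Iterable[RequestLog]) -> Dict[str, Decimal]:
    """Sum request costs per model."""
    totals: Dict[str, Decimal] = defaultdict(Decimal)
    for log in logs:
        totals[log.model] += request_cost(log)
    return dict(totals)


logs = [
    RequestLog("premium-model", 1800, prompt_tokens=2_000, completion_tokens=500),
    RequestLog("budget-model", 400, prompt_tokens=2_000, completion_tokens=500),
    RequestLog("budget-model", 350, prompt_tokens=1_000, completion_tokens=1_000),
]
print(cost_by_model(logs))
```

With these placeholder prices, the same 2,000-in/500-out request costs 0.035 USD on the premium model and 0.00175 USD on the budget model, a 20x gap. Per-request cost logs make gaps like this visible.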

Supports Local + Cloud Hybrid Inference

You can route high-priority or sensitive inference to local models via Ollama while experimenting with remote models. This opens doors for:

- Hybrid deployment strategies

- Data residency compliance

- Cost-effective RAG architectures
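Hybrid routing can be pictured as a function that picks a model for each request. Anything flagged as sensitive, or matching a basic PII pattern, stays on a local model, and everything else may go to a cloud model. This is a hypothetical sketch. The regular expressions are deliberately naive (real PII detection needs a dedicated tool). The model strings `ollama/llama3` and `gpt-4` are assumed examples of LiteLLM's `provider/model` naming, not required values.

```python
import re
from typing import Dict, List

LOCAL_MODEL = "ollama/llama3"  # assumed local model served by Ollama
CLOUD_MODEL = "gpt-4"          # assumed cloud model

# Deliberately simple patterns: email addresses and long digit runs (e.g. account numbers).
PII_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    re.compile(r"\b\d{9,}\b"),
]


def contains_pii(text: str) -> bool:
    return any(p.search(text) for p in PII_PATTERNS)


def choose_model(messages: List[Dict[str, str]], sensitive: bool = False) -> str:
    """Route to the local model if the caller marks the request sensitive or PII is detected."""
    if sensitive or any(contains_pii(m["content"]) for m in messages):
        return LOCAL_MODEL
    return CLOUD_MODEL


print(choose_model([{"role": "user", "content": "Brainstorm blog titles"}]))             # gpt-4
print(choose_model([{"role": "user", "content": "Email jane.doe@example.com back"}]))    # ollama/llama3
print(choose_model([{"role": "user", "content": "Summarize this memo"}], sensitive=True))  # ollama/llama3
```

The chosen string is then sent as the `model` field of an otherwise identical request, so the calling code does not change.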

Security & Compliance Considerations

LiteLLM supports local logging, meaning inference logs and token data stay within your chosen environment. For teams handling sensitive data, you can:

- Route PII-sensitive queries to local inference endpoints (e.g., Ollama)

- Set strict provider allow/block lists

- Configure logging behavior to exclude message content

LiteLLM doesn't claim SOC 2 or ISO 27001 certification itself, but it can be deployed within certified infrastructure. Add your LiteLLM configuration to your security review checklist so it is covered by your compliance process.
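The allow-list and content-free logging ideas above can be sketched as a small policy layer. Everything here is a hypothetical illustration, not LiteLLM configuration. `ALLOWED_PROVIDERS`, `check_provider`, and `log_record` are made-up names, and the provider is read from an assumed `provider/model` prefix. LiteLLM has its own settings for these controls, so check its documentation for the real options.

```python
import json
import time
from typing import Any, Dict, List

ALLOWED_PROVIDERS = {"ollama", "azure"}  # hypothetical policy: local + one approved cloud


def provider_of(model: str) -> str:
    """Extract the provider from a 'provider/model' string (e.g. 'azure/gpt-4')."""
    return model.split("/", 1)[0] if "/" in model else "unknown"


def check_provider(model: str) -> None:
    """Raise if the model's provider is not on the allow list (unprefixed models are blocked)."""
    provider = provider_of(model)
    if provider not in ALLOWED_PROVIDERS:
        raise PermissionError(f"provider '{provider}' is not on the allow list")


def log_record(model: str, messages: List[Dict[str, str]], usage: Dict[str, int], latency_ms: int) -> str:
    """Build a log line with metadata only: message content is never written."""
    record: Dict[str, Any] = {
        "ts": int(time.time()),
        "model": model,
        "message_count": len(messages),
        "prompt_tokens": usage["prompt_tokens"],
        "completion_tokens": usage["completion_tokens"],
        "latency_ms": latency_ms,
    }
    return json.dumps(record)


check_provider("ollama/llama3")  # allowed: no exception
try:
    check_provider("openai/gpt-4")
except PermissionError as exc:
    print("blocked:", exc)  # blocked: provider 'openai' is not on the allow list

line = log_record(
    "ollama/llama3",
    [{"role": "user", "content": "Customer SSN is 123-45-6789"}],
    {"prompt_tokens": 14, "completion_tokens": 20},
    latency_ms=850,
)
print("123-45-6789" in line)  # False
```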

Real-World Example: Fast Deployment with Flexibility

Scaling AI at a BFSI Mid-Market Org

A 2,000-employee BFSI (banking, financial services, and insurance) firm adopted LiteLLM to balance inference load between OpenAI and Hugging Face endpoints. By routing routine requests to local models and reserving premium tokens for high-stakes workflows, they reduced GPU-related costs by 25% while meeting internal audit and data residency standards.

LiteLLM vs LangChain vs OpenLLM

| Feature | LiteLLM | LangChain | OpenLLM |
|---|---|---|---|
| Unified chat API | Yes | Partially (LCEL router since Mar 2025) | No |
| OpenAI-compatible API | Yes | No | Yes |
| Load balancing | Yes | No | Yes |
| Local + cloud support | Yes | Yes | Yes |
| Simple to deploy | Yes (1 file) | Complex | Docker-heavy |
| Logs & cost insights | Yes | No | No |

Final Thoughts: Use the Best Model, Not the Same Model

As startups navigate an increasingly interconnected global market, tools like LiteLLM help bridge the gap between sophisticated AI techniques and practical, real-world applications. Its simple, provider-agnostic approach lets small teams adopt whichever model fits each task without rebuilding their stack.

With model proliferation accelerating, you don’t want your infrastructure glued to a single vendor. LiteLLM lets you keep your stack flexible, cost-aware, and scalable—exactly what investors and engineers want to hear.

Bonus: Getting Started with LiteLLM (1 min)

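pip install 'litellm[proxy]'

litellm --model gpt-4 --port 4000

This starts the proxy in front of a single model (gpt-4 here, which expects OPENAI_API_KEY to be set in your environment). Then change your OpenAI base URL to http://localhost:4000.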
